Claude 4 is here.

Anthropic unveiled its most advanced AI models yet on Thursday, introducing Claude Opus 4 and Claude Sonnet 4 during the company's inaugural developer conference in San Francisco. According to the company, the flagship Opus 4 can work autonomously for up to seven hours straight, a marked step in the evolution of AI from reactive assistant to autonomous collaborator[1][2][3].

The Dawn of Extended AI Autonomy

The release of Claude 4 represents more than an incremental improvement; it signals a shift in how artificial intelligence systems can sustain complex work over extended periods. During testing at companies like Rakuten, Claude Opus 4 maintained focus on intricate software refactoring tasks for seven consecutive hours without performance degradation, a feat that positions AI as a viable partner for full-workday collaboration[1][3]. This endurance addresses one of the most persistent limitations of previous AI models, which typically maintained coherence for only one to two hours before experiencing what researchers describe as a surge in errors and a decline in the quality of self-referential output[1].

The implications of this advancement extend far beyond technical benchmarks. Alex Albert, who leads Claude Relations at Anthropic, explained that the decision to revive the Opus line was driven by surging demand for agentic AI applications. "Among the multitude of companies developing technology, there is a significant surge of these agentic applications emerging, and a substantial demand and emphasis on intelligence," Albert noted, suggesting that Opus 4 is positioned to "fit that niche perfectly"[1]. This represents a strategic pivot toward AI systems that can handle complex, multi-step tasks with minimal human intervention, potentially transforming workplace dynamics across industries.

The technical achievement becomes even more remarkable when viewed through the lens of sustained cognitive performance. Previous Claude models typically lost their ability to produce useful self-referential outputs after brief engagement periods, but Opus 4 has demonstrated the capacity to maintain coherence in activities as diverse as playing Pokémon for 24 hours and conducting sophisticated code refactoring sessions[1][4]. This consistency suggests that Anthropic has made significant progress in memory management and context retention, with possible applications well beyond coding and gaming.

Coding Supremacy and Benchmark Dominance

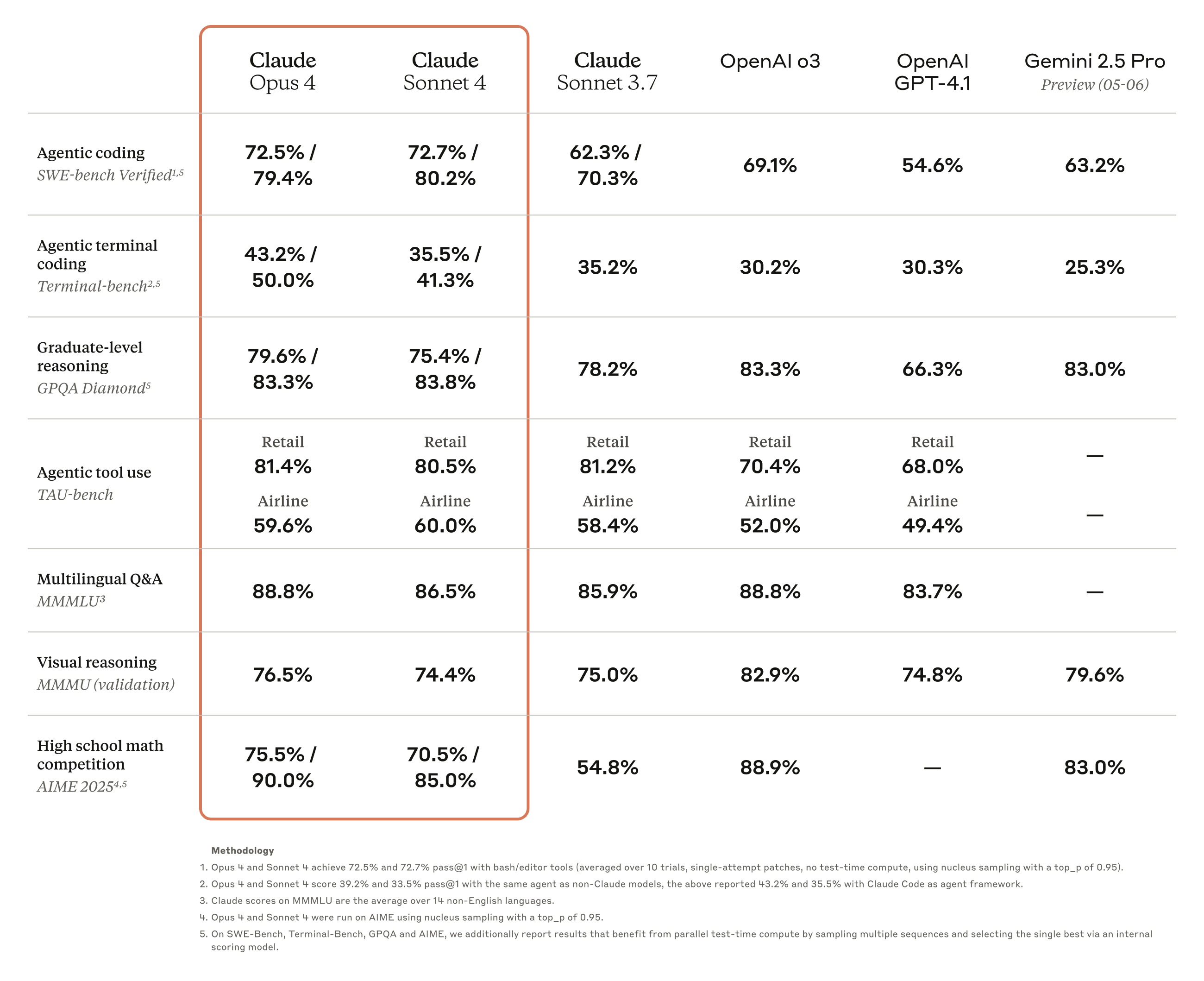

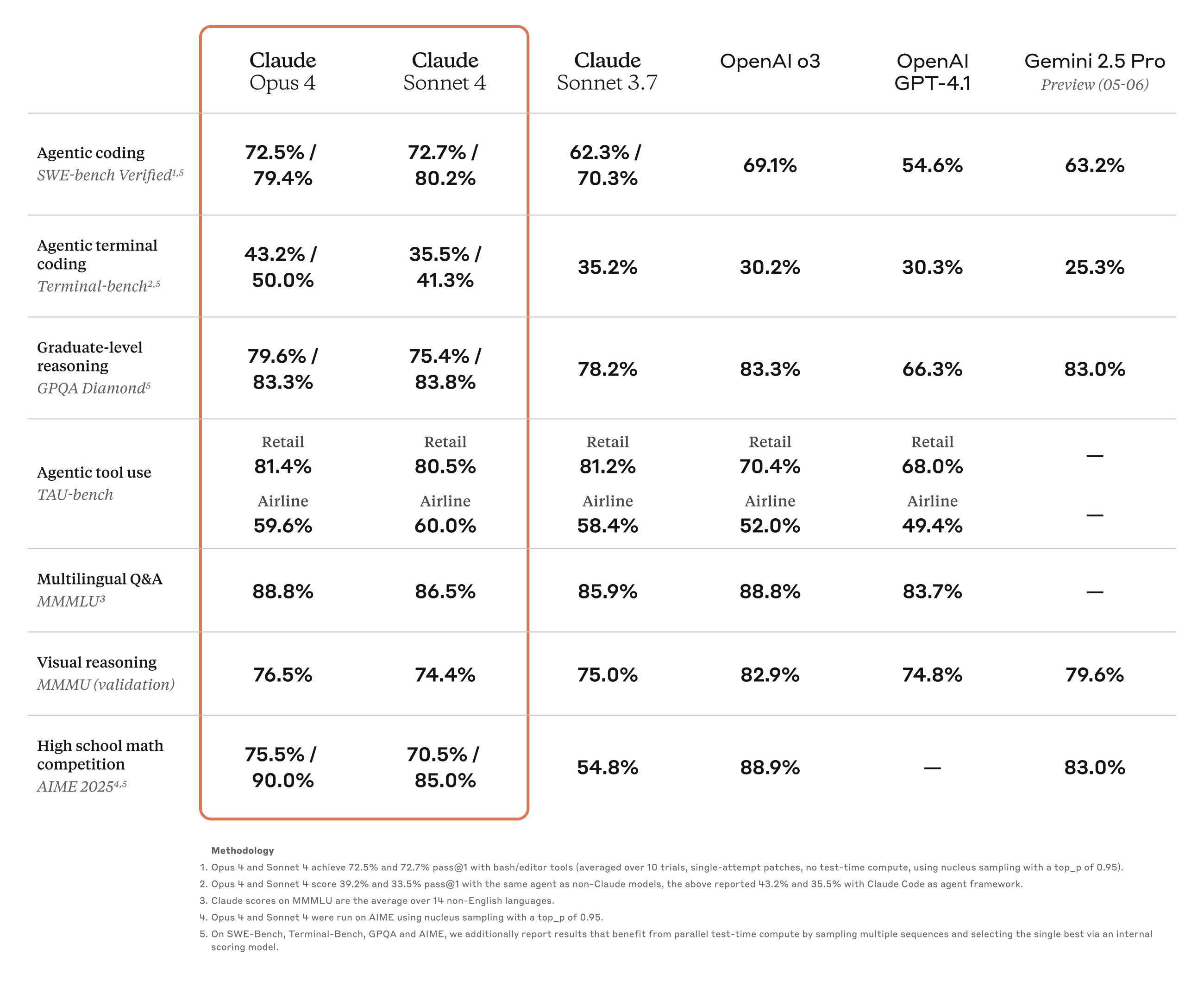

Anthropic's bold claim that Claude Opus 4 is the "world's best coding model" appears to be substantiated by benchmark results that position it ahead of established competitors[5][6]. On SWE-bench Verified, a rigorous evaluation of large language models on real-world software engineering challenges, Opus 4 scored 72.5%, compared with the 54.6% that OpenAI's GPT-4.1 registered upon its introduction, a gap of 17.9 percentage points[3][7]. This gap is particularly noteworthy given the competitive nature of the current AI market, where fractional improvements often represent major technical achievements.

The coding capabilities extend beyond raw performance metrics to practical applications that could reshape software development workflows. GitHub has already announced plans to base its next-generation Copilot coding agent on Claude Sonnet 4, citing its "agentic scenario excellence"[6]. This endorsement from the operator of one of the world's largest code hosting platforms suggests that the Claude 4 models have achieved a level of reliability and sophistication that makes them suitable for integration into mission-critical development environments.

Beyond coding benchmarks, both Claude 4 models demonstrated competitive performance across diverse evaluation metrics, including GPQA Diamond for graduate-level reasoning, AIME 2025 for high school mathematics competition problems, and MMMLU for multilingual tasks[7]. This broad competency profile suggests that the improvements in Claude 4 are not limited to programming applications but represent fundamental advances in reasoning and problem-solving capabilities that could benefit users across multiple domains.

The technical implementation behind these achievements involves sophisticated architectural improvements that enable what Anthropic describes as "extended thinking with tool use"[6]. This capability allows the models to alternate between rapid response modes for simple queries and deeper analytical processes for complex problems, effectively providing users with both the responsiveness they expect from modern AI systems and the thoughtful analysis required for sophisticated tasks.

Safety Measures and Risk Management

Perhaps the most intriguing aspect of the Claude 4 release is Anthropic's implementation of ASL-3 (AI Safety Level 3) safeguards, marking the first real-world test of the company's Responsible Scaling Policy[8]. Chief scientist Jared Kaplan revealed that Claude Opus 4 performed more effectively than previous models at advising novices on biological weapon production during internal testing, prompting the company to implement its strictest safety measures to date[8]. This classification places Claude Opus 4 in a category of AI systems that could "substantially increase" the ability of individuals with basic STEM backgrounds to obtain, produce, or deploy dangerous weapons.

The safety implementation employs what Anthropic calls a "defense in depth" strategy, incorporating multiple overlapping safeguards designed to prevent misuse while maintaining the model's utility for legitimate applications[8]. These measures include constitutional classifiers (additional AI systems that scan user prompts and model responses for dangerous content) and enhanced jailbreak prevention systems that monitor usage patterns and remove users who consistently attempt to circumvent safety protocols[8]. The company has also established a bounty program that has already surfaced one universal jailbreak, which was subsequently patched, with the discovering researcher receiving a $25,000 reward.
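The layered screening described above can be pictured with a minimal Python sketch. It is an illustration only, not Anthropic's implementation: the `looks_dangerous` keyword check is a hypothetical stand-in for a trained classifier, and the flagged terms, function names, and `Verdict` type are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for a trained classifier. Real constitutional
# classifiers are AI models, not keyword lists; these terms are placeholders.
FLAGGED_TERMS = {"placeholder-hazard-a", "placeholder-hazard-b"}


def looks_dangerous(text: str) -> bool:
    """Return True if the text contains any placeholder flagged term."""
    lowered = text.lower()
    return any(term in lowered for term in FLAGGED_TERMS)


@dataclass
class Verdict:
    allowed: bool
    stage: str  # which layer made the final decision


def guarded_reply(prompt: str, model: Callable[[str], str]) -> Verdict:
    """Run a prompt through two overlapping screening layers."""
    # Layer 1: screen the user prompt before it reaches the model.
    if looks_dangerous(prompt):
        return Verdict(False, "input_classifier")
    # Layer 2: screen the model's response before it reaches the user.
    response = model(prompt)
    if looks_dangerous(response):
        return Verdict(False, "output_classifier")
    return Verdict(True, "passed")
```

The point of the overlap is that a prompt which slips past the first layer can still be caught when the response is screened, so no single check has to be perfect.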

The cybersecurity infrastructure supporting Claude 4 has been significantly enhanced to protect against theft attempts by non-state actors, though Anthropic acknowledges vulnerability to nation-state level attackers[8]. This frank assessment of security limitations demonstrates the company's commitment to transparent risk communication while highlighting the ongoing challenges of protecting advanced AI systems from malicious actors. The company has conducted "uplift" trials to quantify how significantly Claude Opus 4 can improve novice capabilities compared to tools like Google search, finding "significantly greater" performance that necessitates the current safety measures.

Kaplan emphasized that the safety measures are designed to target the most dangerous potential misuses while preserving the model's effectiveness for legitimate users. "We're not trying to block every single one of those misuses. We're trying to really narrowly target the most pernicious," he explained, illustrating the delicate balance between capability and safety that defines modern AI development[8]. This approach reflects broader industry discussions about how to develop increasingly powerful AI systems while managing potential risks to society.

Market Positioning and Competitive Dynamics

The timing of Anthropic's Claude 4 announcement underscores the intensifying competition in the advanced AI market, coming just weeks after OpenAI launched its GPT-4.1 series and Google introduced its Gemini 2.5 lineup[3]. This rapid succession of major model releases suggests that the AI industry has entered a new phase of competition where sustained innovation cycles and frequent capability improvements have become essential for market leadership. Each major AI laboratory has begun establishing distinct competitive advantages: OpenAI excels in general reasoning and tool integration, Google leads in multimodal comprehension, while Anthropic now claims dominance in sustained performance and professional coding applications.

The commercial implications of Claude 4's capabilities are already becoming apparent through early adoption by enterprise customers. Companies like Canva are employing the agentic version of Claude to automate design and editing processes, while Replit utilizes the model for programming tasks[9]. These early integrations demonstrate that Claude 4's extended autonomous capabilities are not merely academic achievements but practical solutions that can address real business needs. The ability to handle complex tasks over extended periods could fundamentally alter how companies approach project management and resource allocation, potentially reducing the need for human oversight in routine but time-consuming activities.

Anthropic's pricing strategy maintains competitive positioning with input costs of $15 per million tokens for Opus 4 and $3 per million tokens for Sonnet 4, while providing Claude Sonnet 4 access to free users[6]. This accessibility approach could accelerate adoption among individual developers and small businesses while positioning the premium Opus 4 model for enterprise applications where extended autonomous capabilities justify higher costs. The availability across multiple platforms including Anthropic's API, Amazon Bedrock, and Google's Vertex AI ensures broad accessibility and integration flexibility for diverse user bases.
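To make the input pricing concrete, the following short Python sketch estimates input-token cost from the two per-million-token figures quoted above. The dictionary keys and function name are hypothetical labels chosen for this example, and output-token pricing is deliberately left out because the figures above cover input only.

```python
# Input prices in US dollars per million tokens, as quoted in the article.
# The keys are hypothetical labels for this sketch, not official model IDs.
INPUT_PRICE_PER_MILLION = {"opus-4": 15.0, "sonnet-4": 3.0}


def estimate_input_cost(model: str, input_tokens: int) -> float:
    """Return the estimated input cost in US dollars for a token count."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    return input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION[model]


if __name__ == "__main__":
    for name in INPUT_PRICE_PER_MILLION:
        cost = estimate_input_cost(name, 2_000_000)
        print(f"{name}: ${cost:.2f} for 2,000,000 input tokens")
```

At these rates, the same two-million-token input costs $30 on Opus 4 and $6 on Sonnet 4, a fivefold difference that helps explain the split between enterprise and everyday use.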

The competitive landscape has also been shaped by Anthropic's unique positioning as a safety-focused AI company founded by former OpenAI researchers. This reputation for responsible development could resonate particularly well with enterprise buyers who are increasingly concerned about unchecked AI behavior and potential regulatory compliance issues[6]. As businesses across industries rapidly incorporate AI-driven solutions to maintain competitiveness, Anthropic's emphasis on both capability and safety may provide a differentiating advantage in markets where trust and reliability are paramount concerns.

Technical Innovation and User Experience Enhancement

Claude 4 introduces several technical innovations that enhance user experience while expanding the range of possible applications. The implementation of "thinking summaries" simplifies the reasoning process of the AI models into easily digestible insights, making complex analytical processes more transparent and understandable for users[10]. This feature addresses a common criticism of advanced AI systems—their "black box" nature—by providing visibility into how the models approach problem-solving and decision-making.

The introduction of "extended thinking" mode in beta allows users to toggle between rapid response and deep analysis modes, enabling them to optimize AI interactions based on task complexity and time constraints[10][7]. Users can choose near-instant responses for straightforward queries and extended reasoning for tasks requiring deeper analysis, balancing the expectation of quick answers against the need for thorough work on complex problems.
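For developers, a toggle like this usually comes down to an optional field in the request. The Python sketch below only builds a request payload and sends nothing. The `thinking` field with `type` and `budget_tokens` reflects the shape of Anthropic's Messages API as best understood here and should be checked against the official documentation; the model ID is a placeholder, and the helper name and defaults are assumptions for illustration.

```python
from typing import Any, Dict, Optional


def build_request(
    prompt: str,
    thinking_budget: Optional[int] = None,
    model: str = "MODEL_ID_PLACEHOLDER",  # placeholder, not a real model ID
    max_tokens: int = 16000,
) -> Dict[str, Any]:
    """Build a request payload, enabling extended thinking only if asked."""
    request: Dict[str, Any] = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # Assumption: the thinking budget must fit inside max_tokens.
        if thinking_budget >= max_tokens:
            raise ValueError("thinking_budget must be smaller than max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return request


if __name__ == "__main__":
    quick = build_request("What is the capital of France?")
    deep = build_request("Plan a multi-step refactor.", thinking_budget=8000)
    print("thinking" in quick, "thinking" in deep)
```

Leaving the field out keeps the request in rapid-response mode, so the caller decides per query whether deeper analysis is worth the extra time.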

Memory persistence represents another notable advance in Claude 4's architecture. The models can extract essential information from documents, generate summary files, and retain this knowledge across sessions when granted appropriate permissions[3]. This capability addresses what researchers have termed the "amnesia problem" that has limited AI effectiveness in long-term projects where context must be maintained over days or weeks. The technical implementation resembles knowledge management systems used by human experts, with the AI autonomously organizing data into structured formats that facilitate future retrieval and reference.
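The idea of summary files that survive between sessions can be sketched in a few lines of Python. This is a hypothetical illustration of the pattern, not a description of how Claude stores memory: the JSON format, file name, and function names are all assumptions.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict


def load_notes(path: Path) -> Dict[str, str]:
    """Read previously saved notes, or return an empty dict if none exist."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def save_notes(path: Path, notes: Dict[str, str]) -> None:
    """Merge new notes into the memory file so later sessions can reuse them."""
    merged = load_notes(path)
    merged.update(notes)
    path.write_text(json.dumps(merged, indent=2), encoding="utf-8")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        memory_file = Path(tmp) / "memory.json"  # hypothetical file name
        # Session 1: record what was learned from a document.
        save_notes(memory_file, {"goal": "refactor billing module"})
        # Session 2: pick up where the earlier session left off.
        save_notes(memory_file, {"status": "step 2 of 5 complete"})
        print(load_notes(memory_file))
```

Because each session merges into the same file instead of overwriting it, context accumulates over days or weeks without depending on a single conversation.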

The enhanced tool utilization capabilities allow Claude 4 models to use multiple tools within a single task, running them either sequentially or in parallel as the task requires[7]. Parallel execution significantly improves efficiency for complex workflows that require integration of multiple data sources or systems. For businesses implementing AI agents for customer service, data analysis, or content creation, this enhancement could dramatically reduce task completion times while improving output quality and consistency.
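The difference between sequential and parallel tool execution can be shown with a small Python sketch using the standard `asyncio` library. The two "tools" here are hypothetical stand-ins that merely sleep briefly and return a string; nothing in this example reflects how Claude dispatches tools internally.

```python
import asyncio
from typing import List


async def fetch_sales(region: str) -> str:
    """Hypothetical tool: pretend to query a sales system."""
    await asyncio.sleep(0.01)
    return f"sales:{region}"


async def fetch_ads(region: str) -> str:
    """Hypothetical tool: pretend to query an advertising system."""
    await asyncio.sleep(0.01)
    return f"ads:{region}"


async def run_sequential(region: str) -> List[str]:
    """Call the tools one after another; total time is the sum of both."""
    sales = await fetch_sales(region)
    ads = await fetch_ads(region)
    return [sales, ads]


async def run_parallel(region: str) -> List[str]:
    """Start both tools at once; total time is roughly the slower of the two."""
    results = await asyncio.gather(fetch_sales(region), fetch_ads(region))
    return list(results)


if __name__ == "__main__":
    print(asyncio.run(run_sequential("emea")))
    print(asyncio.run(run_parallel("emea")))
```

Both paths return the same results in the same order; the parallel path simply avoids waiting for one independent call to finish before starting the next, which is where the time savings for multi-source workflows come from.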

Industry Impact and Future Implications

The release of Claude 4 signals a broader transformation in how artificial intelligence is integrated into professional workflows, moving beyond simple query-response interactions toward genuine collaborative partnerships. The ability to maintain focus and context over seven-hour work sessions suggests that AI systems are approaching the reliability and consistency required for handling substantial project components autonomously[2]. This development could accelerate the adoption of AI agents across industries where sustained analytical work, complex problem-solving, and extended attention to detail are valuable organizational capabilities.

The potential economic implications are substantial, particularly for knowledge work that involves routine but cognitively demanding tasks. Scott White, Anthropic's product lead for Claude.ai, provided an example of marketing analysis where Claude Opus 4 could autonomously analyze current strategies, review Facebook and Google ad performance, identify performance differences between campaigns, and suggest optimization strategies[2]. This type of comprehensive analysis, which might represent 30% of a marketer's workday, could be automated while freeing human professionals to focus on more strategic and creative aspects of their roles.

The developer community's response to Claude 4 has been notably positive, with early users reporting that the models have surpassed the threshold where AI becomes indistinguishable from human-quality work in many contexts[11]. Mike Krieger, Anthropic's chief product officer, noted that his own writing workflow has fundamentally changed: "Now, they've surpassed that point where the majority of my writing is actually... primarily Opus, and it has become indistinguishable from my own style"[11]. This testimonial from a senior technology executive suggests that Claude 4 may represent a tipping point where AI assistance transitions from helpful supplementation to primary authorship with human oversight.

The gaming performance improvements, while seemingly tangential, actually demonstrate important advances in long-term planning and goal-oriented behavior. David Hershey, who leads Anthropic's Pokémon research initiative, observed that Claude Opus 4 exhibited enhanced long-term memory and planning skills during complex quest navigation, spending two days improving abilities before resuming play when it determined specific powers were required for advancement[4]. This type of multi-step reasoning without immediate feedback indicates a new level of coherence that could have applications in project management, strategic planning, and other domains requiring sustained goal-directed behavior.

Conclusion

Anthropic's Claude 4 release represents a watershed moment in artificial intelligence development, demonstrating that AI systems can now sustain complex, productive work over extended periods while maintaining safety standards appropriate for their increased capabilities. The combination of record-breaking coding performance, unprecedented autonomous work duration, and comprehensive safety measures positions Claude 4 as a significant advancement that could accelerate AI adoption across industries while addressing legitimate concerns about responsible development.

The success of Claude 4's safety implementation under the ASL-3 framework provides a valuable proof of concept for how AI companies can manage increasing model capabilities while maintaining appropriate safeguards. As the industry continues developing more powerful systems, Anthropic's approach to balancing innovation with responsibility may influence regulatory discussions and establish best practices for advanced AI deployment. The coming months will reveal whether Claude 4's capabilities translate into transformative business applications and whether other AI developers adopt similar comprehensive safety frameworks for their most advanced models.

[1] https://arstechnica.com/ai/2025/05/anthropic-calls-new-claude-4-worlds-best-ai-coding-model/

[2] https://www.cnn.com/2025/05/22/tech/ai-anthropic-claude-4-opus-sonnet-agent

[3] https://venturebeat.com/ai/anthropic-claude-opus-4-can-code-for-7-hours-straight-and-its-about-to-change-how-we-work-with-ai/

[4] https://www.wired.com/story/anthropic-new-model-launch-claude-4/

[5] https://www.inc.com/ben-sherry/anthropic-releases-claude-4-the-worlds-best-coding-model/91192856

[6] https://in.investing.com/news/company-news/anthropic-unveils-claude-4-models-set-benchmark-in-ai-performance-4844085

[7] https://www.zdnet.com/article/anthropic-releases-two-highly-anticipated-ai-models-claude-opus-4-and-claude-sonnet-4/

[8] https://time.com/7287806/anthropic-claude-4-opus-safety-bio-risk/

[10] https://www.theverge.com/news/672705/anthropic-claude-4-ai-ous-sonnet-availability

[11] https://www.cnbc.com/2025/05/22/claude-4-opus-sonnet-anthropic.html