The Silicon Lottery: Choosing the Right GPU for Your AI Adventures

In the ever-accelerating race of artificial intelligence, the humble graphics processing unit—once the domain of gamers and digital artists—has become the workhorse powering our algorithmic future. As someone who spent three weeks trying to run a simple image generation model on my ancient laptop only to watch it crash spectacularly (and repeatedly), I've learned the hard way that not all computing hardware is created equal when it comes to AI.

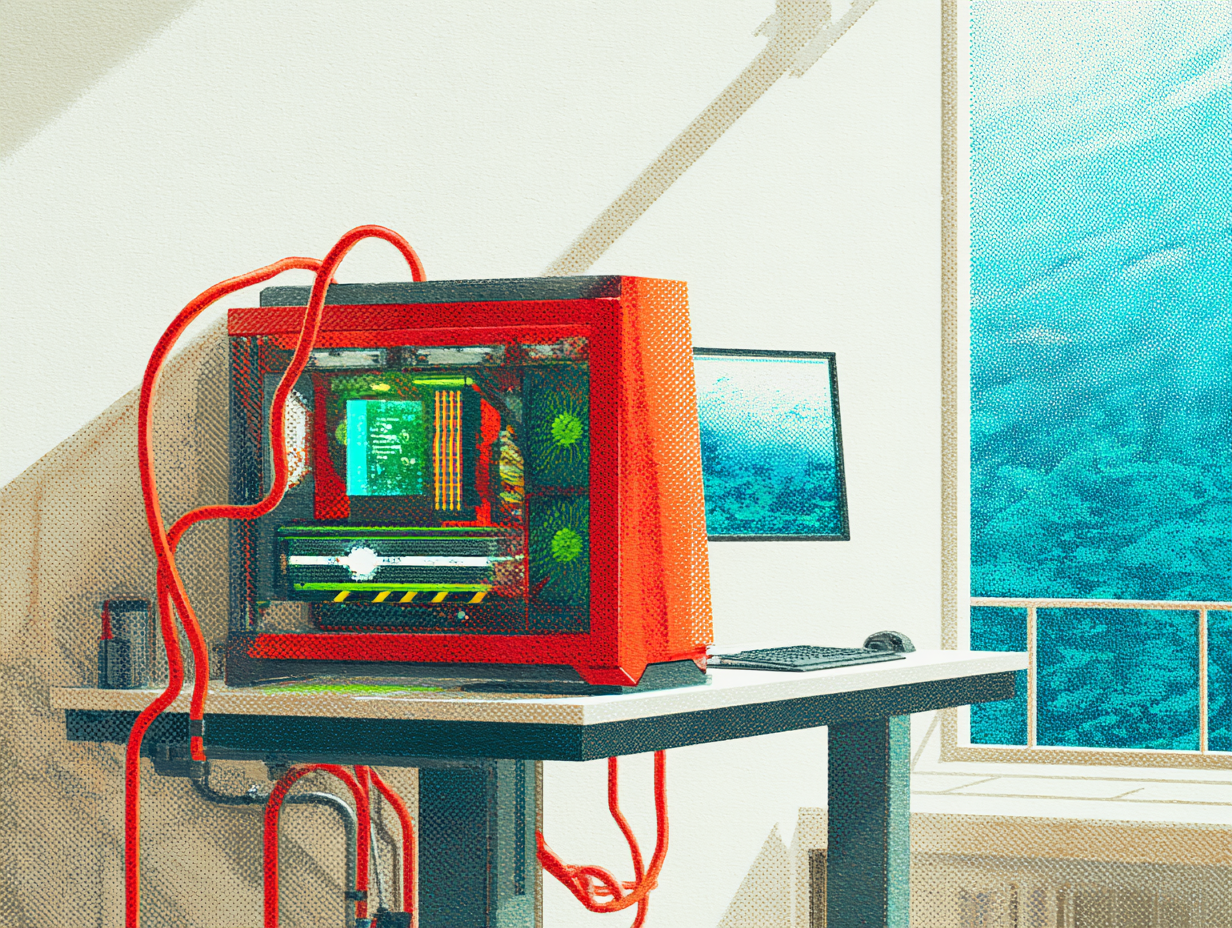

Whether you're looking to generate surrealist portraits of your cat as Renaissance royalty or fine-tune the next breakthrough language model in your spare bedroom, the GPU you choose will determine whether you spend your evenings marveling at AI's capabilities or staring at progress bars that seem frozen in time.

So let's embark on this silicon safari together, shall we? From budget-friendly options for the curious beginner to power-hungry beasts for the serious AI enthusiast, here's your guide to choosing the right GPU for bringing artificial intelligence into your home—without requiring a second mortgage or your own power plant.

The AI Hardware Landscape: Why GPUs Matter

Before diving into specific recommendations, let's understand why graphics cards have become the backbone of AI computing.

GPUs were originally designed to render complex 3D graphics for video games by performing thousands of calculations simultaneously. This parallel processing architecture—doing many simple calculations at once rather than a few complex ones sequentially—turns out to be perfect for the mathematical operations that power AI[1].

As Dr. Vivian Lien from Intel puts it: "The same computing power that enables smooth graphics also turns out to be really useful for AI"[1:1]. While your computer's CPU might have 8 or 16 cores, modern GPUs pack thousands of smaller cores that can tackle the matrix multiplications and tensor operations that form the foundation of machine learning.

This is why, when your friend brags about "training a model," they're likely not referring to obedience school for algorithms but rather the computationally intensive process of feeding data through neural networks—a process that can be dramatically accelerated with the right GPU.

Understanding the AI Terminology Landscape

With the "why" covered, let's decode some of the terminology you'll encounter in the AI world:

Inference vs. Training: Think of training as education and inference as application. Training is the resource-intensive process of teaching a model patterns from data, while inference is using that trained model to make predictions or generate content[2]. Training might take days or weeks on powerful hardware, while inference can often run on more modest setups.

Parameters: These are the adjustable values that a model learns during training. More parameters generally mean a more capable but resource-hungry model. When you hear about models with "billions of parameters," that's referring to their complexity and size[3].

Quantization: A technique that reduces the precision of numbers used in a model, making it smaller and faster while sacrificing some accuracy. When someone mentions "running a quantized model," they're using a compressed version that requires less memory and computing power[3:1].
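To make the trade-off concrete, here is a minimal sketch of one common scheme, symmetric 8-bit quantization, in plain Python. The weight values are made up for illustration, and real tools use more elaborate per-block schemes, so treat this as a toy model of the idea rather than how any particular library does it.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize_int8(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.423, -1.27, 0.051, 0.9]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

# The rounding step is where accuracy is lost: each value can be off by
# up to half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, f"max error {max_error:.4f}")
```

Each weight now needs one byte instead of two (16-bit) or four (32-bit), which is where the memory savings come from.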

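TOPS (Tera Operations Per Second): A measurement of computational performance, specifically how many trillion operations a processor can perform each second. A higher TOPS rating generally means faster AI processing[4], though vendors usually quote the figure at low precision (often INT8), so only ratings measured at the same precision are comparable.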
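As a rough illustration of what a TOPS rating implies, the sketch below counts the operations in a single matrix multiplication and divides by a peak rating. The 100 TOPS figure and the matrix size are hypothetical, and real workloads rarely sustain peak throughput, so the result is a best-case lower bound on run time.

```python
def matmul_operations(m, k, n):
    """Operations in an (m x k) by (k x n) product, counting each multiply-add as 2."""
    return 2 * m * k * n


def ideal_seconds(operations, tops):
    """Best-case run time if the chip sustained its peak rating."""
    return operations / (tops * 1e12)


ops = matmul_operations(4096, 4096, 4096)
print(f"{ops / 1e12:.3f} trillion operations")
print(f"{ideal_seconds(ops, tops=100) * 1000:.2f} ms at a hypothetical 100 TOPS")
```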

Local Models: AI models that run entirely on your own hardware rather than in the cloud, offering privacy and eliminating internet dependency[2:1].

Fine-tuning: The process of taking a pre-trained model and further training it on specific data for specialized tasks. It's like taking a general education and adding specialized training[3:2].

Now that we have our terminology straight, let's explore which GPUs might be right for your AI journey, depending on your experience level and ambitions.

For Beginners: Dipping Your Toes in the AI Waters

If you're just starting your AI journey, you don't need to remortgage your house for a data center-grade GPU. Several affordable options can run basic models and let you experiment with AI applications.

NVIDIA RTX 4070 Ti SUPER: The Friendly Entry Point

The RTX 4070 Ti SUPER offers an excellent balance of performance and affordability for AI beginners. With 16GB of GDDR6X memory and 4th generation Tensor Cores, it provides enough power to run inference on most consumer-grade AI models[5].

Key Specifications:

- 16GB GDDR6X memory with 672 GB/s bandwidth

- 8,448 CUDA cores

- 4th Generation Tensor Cores

- 285W power consumption

- Price range: $800-$1,000

This GPU excels at running inference tasks like image generation through Stable Diffusion, text generation with smaller language models, and AI-enhanced creative workflows. The 16GB memory is sufficient for running most quantized language models locally using software like Ollama or LM Studio.

As one reviewer noted: "While you won't be training large LLMs on a 4070 Ti SUPER, it's perfect for running inference tasks, AI art generation, and dabbling in AI model development"[5:1].

AMD Radeon RX 7900 XTX: The NVIDIA Alternative

If you prefer AMD or want to avoid NVIDIA's ecosystem, the Radeon RX 7900 XTX offers comparable performance for beginners. With 24GB of GDDR6 memory, it provides ample space for running inference on most consumer AI models[6].

Key Specifications:

- 24GB GDDR6 memory

- 960 GB/s memory bandwidth

- Strong OpenCL performance

- Price range: Similar to the RTX 4070 Ti SUPER ($800-$1,000)

The 7900 XTX is particularly good for "AI-assisted graphics processing, OpenCL-based machine learning, and small-scale AI inference"[6:1]. While AMD's AI software ecosystem, built around ROCm, isn't as mature as NVIDIA's, the card's generous 24GB of memory makes it viable for running local AI models.

Intel Arc Pro B50: The Newcomer

Intel recently entered the AI GPU market with their Arc Pro series, including the B50 announced at Computex 2025[7][8]. While not as powerful as offerings from NVIDIA or AMD, these GPUs provide an alternative for basic AI tasks.

Key Specifications:

- Designed for AI inference and professional workstations

- Compatible with consumer and pro drivers on Windows

- Supports containerized software stack for AI deployment on Linux

- Lower price point than comparable NVIDIA options

Intel's Arc Pro GPUs are particularly suited for "small and medium-sized businesses that have been looking for targeted solutions"[8:1]. For beginners wanting to experiment with basic AI models without a significant investment, these provide a viable entry point.

Beginner Software Ecosystem

With these entry-level GPUs, beginners can comfortably run:

- Ollama: A user-friendly tool for running open-source language models locally

- LM Studio: An intuitive interface for downloading and running various AI models

- Automatic1111: The popular web UI for Stable Diffusion image generation

- Smaller Hugging Face models: Pre-trained models that don't require massive GPU memory

These GPUs will struggle with training custom models from scratch but are perfect for inference and fine-tuning smaller pre-trained models. They'll handle generating images, answering questions through local chatbots, and basic AI-assisted creative work without breaking a sweat.
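For readers curious what "running a local chatbot" looks like in practice, here is a hedged sketch that talks to Ollama's local HTTP endpoint using only the Python standard library. It assumes Ollama is installed and running on its default port, and the model tag `llama3.2` is a placeholder for whichever model you have actually downloaded.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_request(prompt, model="llama3.2"):
    """Build the HTTP request; the model tag is a placeholder for one you have pulled."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, model="llama3.2"):
    """Send the prompt to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]
```

Calling `ask_local_model("Explain VRAM in one sentence.")` only works while the Ollama service is running and the model is downloaded; nothing leaves your machine.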

For Intermediate Users: Serious AI Exploration

If you've moved beyond basic experimentation and want to work with larger models or try your hand at training and fine-tuning, you'll need more GPU muscle. These mid-range options provide significant AI capabilities without enterprise-level prices.

NVIDIA RTX 4080 SUPER: The Balanced Performer

The RTX 4080 SUPER strikes an excellent balance between performance and cost for intermediate AI enthusiasts. With 16GB of high-speed memory and advanced Tensor Cores, it handles most AI workloads comfortably[5:2].

Key Specifications:

- 16GB GDDR6X memory with 736 GB/s bandwidth

- 10,240 CUDA cores

- 4th Generation Tensor Cores with FP8 support

- 320W power consumption

- Price range: $1,000-$1,200

This GPU excels at "AI inference, content creation tools like Adobe's Firefly AI, and AI video upscaling"[5:3]. The 4th generation Tensor Cores provide significant acceleration for deep learning tasks, making it suitable for fine-tuning smaller models and running inference on larger ones.

NVIDIA RTX 4090: The Consumer Powerhouse

For intermediate users willing to invest more, the RTX 4090 represents the pinnacle of NVIDIA's Ada Lovelace consumer lineup for AI workloads[6:2][9][5:4].

Key Specifications:

- 24GB GDDR6X memory with 1,008 GB/s bandwidth

- Built on the Ada Lovelace architecture

- 4th Generation Tensor Cores

- 450W power consumption

- Price range: $1,500-$2,000

The RTX 4090 "delivers roughly 70% of an NVIDIA A100's effective AI performance at about 25% of the cost"[6:3], making it the value king for serious AI enthusiasts. Its generous 24GB memory allows running larger language models locally and handling more demanding image generation tasks.
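The arithmetic behind that "value king" label is worth spelling out. The short sketch below simply divides the quoted performance ratio by the quoted cost ratio; both inputs come from the quote above and are not independently measured.

```python
def relative_value(performance_ratio, cost_ratio):
    """Performance per dollar relative to the reference card (1.0 means equal value)."""
    return performance_ratio / cost_ratio


value = relative_value(performance_ratio=0.70, cost_ratio=0.25)
print(f"About {value:.1f}x the performance per dollar")  # About 2.8x
```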

One reviewer noted it's ideal for "fine-tuning smaller AI models, running multiple concurrent inference workloads, and local development of specialized models before production"[6:4].

AMD Instinct MI210: The Workstation Alternative

For intermediate users looking beyond NVIDIA, AMD's Instinct MI210 accelerator offers substantial AI performance with generous memory capacity[6:5].

Key Specifications:

- 64GB of HBM2e memory

- Infinity fabric links for multi-GPU scaling

- Good for OpenCL-based AI applications

- Higher price point than consumer GPUs

The MI210 particularly shines in "multi-GPU configurations, with infinity fabric links enabling near-linear scaling across up to four cards"[6:6]. This makes it an interesting option for users who might want to expand their AI computing capacity over time.

Intermediate Software Ecosystem

With these more powerful GPUs, intermediate users can comfortably work with:

- Larger language models: Run 7B-parameter models locally at 16-bit precision, or 13B-parameter models with 8-bit or 4-bit quantization

- Open WebUI: A more advanced interface for working with AI models

- ComfyUI: Node-based interface for complex image generation workflows

- Fine-tuning: Customize pre-trained models on your own data

- Multiple models simultaneously: Run inference on several models at once

- Quantization experiments: Test different precision levels to optimize performance

These GPUs also enable working with NSFW content (where legal and ethical), as they provide enough power to run unrestricted models locally rather than relying on cloud services with content filters.

For Advanced Users: Professional AI Development

For those deeply invested in AI research, development, or production, consumer-grade GPUs may not suffice. These high-end options provide the computational power needed for serious AI work.

NVIDIA RTX 5090: The Next-Generation Flagship

The RTX 5090, built on the Blackwell architecture and launched in January 2025, is a major step up for advanced AI users working from home[5:5].

Key Specifications:

- 32GB GDDR7 memory

- 5th Generation Tensor Cores

- Blackwell architecture

- Launch price: $1,999+

This GPU is pitched at "next-gen AI workloads" and billed as the "prosumer king"[5:6]. The increased memory capacity and newer architecture make it well suited to training medium-sized models and running inference on larger ones.

NVIDIA RTX A5000: Professional-Grade Performance

For those requiring professional reliability and features, the NVIDIA RTX A5000 offers workstation-class performance[9:1].

Key Specifications:

- 24GB GDDR6 memory with ECC (error correction)

- Ampere Architecture CUDA Cores

- Third-Generation Tensor Cores

- NVLink support for multi-GPU setups

- 230W power consumption

The RTX A5000 provides features particularly valuable for professional AI work, including "ECC for error correction, ensuring reliability for memory-intensive workloads"[9:2]. Its NVLink capability allows bridging two cards for a combined 48GB of memory, enabling work with larger models when the software splits the model across both cards.

Multi-GPU Setups: Scaling Up Your AI Power

Advanced users often benefit from running multiple GPUs in a single system. This approach provides several advantages for AI workloads[10]:

Parallelism Strategies:

- Data Parallelism: Splitting data across multiple GPUs

- Tensor Parallelism: Dividing the AI model itself across GPUs

- Pipeline Parallelism: Breaking the process into sequential stages
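Data parallelism is the easiest of the three to picture in code. The sketch below simulates it in plain Python with a one-weight model: each "GPU" computes a gradient on its own slice of the batch, and the slices are averaged. The model, data, and worker count are invented for illustration, and the sketch assumes the batch divides evenly across workers; real frameworks also handle communication between devices, which is omitted here.

```python
def gradient(w, xs, ys):
    """Gradient of mean squared error for the one-weight model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_gradient(w, xs, ys, num_workers):
    """Give each simulated GPU an equal slice of the batch, then average the results."""
    shard = len(xs) // num_workers  # assumes len(xs) is divisible by num_workers
    grads = [
        gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    return sum(grads) / num_workers


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = gradient(0.5, xs, ys)
split = data_parallel_gradient(0.5, xs, ys, num_workers=2)
print(full, split)  # the two values match
```

Because the averaged result equals the full-batch gradient, each device only ever needs to hold its own share of the data, which is the whole appeal of the approach.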

Multi-GPU setups require careful consideration of interconnects:

- PCIe: Common but can bottleneck peer-to-peer GPU communication

- NVLink: NVIDIA's proprietary connection allowing fast direct memory access between GPUs

- InfiniBand: Enables ultra-low latency, high throughput between nodes in multi-server setups

As one expert notes: "Scaling across GPUs is the only practical path forward for serious model training"[10:1]. For home setups, two to four GPUs can provide substantial performance gains for training and running multiple models simultaneously. Cards with a direct link, such as NVLink on the RTX A5000 (which bridges two cards) or Infinity Fabric on the MI210, avoid the PCIe bottleneck; consumer RTX 40-series cards lack NVLink and communicate over PCIe instead.

Power Considerations for Advanced Setups

Advanced GPU configurations demand serious attention to power requirements. High-end AI GPUs can draw substantial power:

- RTX 4090: 450W

- RTX A5000: 230W

- H100: Up to 700W

- Multiple GPU setups: 1,500W-3,000W total system power

As one guide to multi-GPU setups notes, "Each high-end GPU can draw 300–700W+" and "Multi-GPU setups may require 2–3kW per server rack"[10:2]. Home users should check that their electrical circuits can handle these demands (a typical 15-amp, 120-volt household circuit in North America supplies at most 1,800W) and invest in cooling that prevents thermal throttling.
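A quick back-of-the-envelope calculation helps when sizing a power supply or bracing for the electric bill. In the sketch below, the 250W allowance for the rest of the system, the 25% headroom, the four hours of daily use, and the $0.15 per kWh rate are all assumptions; substitute your own figures.

```python
def recommended_psu_watts(gpu_watts, num_gpus, rest_of_system_watts=250, headroom=0.25):
    """Sum the component draw and add a safety margin (both defaults are assumptions)."""
    load = gpu_watts * num_gpus + rest_of_system_watts
    return load * (1 + headroom)


def monthly_cost(load_watts, hours_per_day, price_per_kwh, days=30):
    """Electricity cost of running a given load for some hours every day."""
    kwh = load_watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


psu = recommended_psu_watts(gpu_watts=450, num_gpus=2)  # two RTX 4090-class cards
cost = monthly_cost(load_watts=450, hours_per_day=4, price_per_kwh=0.15)
print(f"Suggested PSU capacity: {psu:.0f}W")
print(f"One 450W GPU, 4 hours a day: ${cost:.2f} per month")
```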

The power consumption trend is increasing with each generation. For example, "Intel's upcoming hybrid AI processor, Falcon Shores, is expected to consume a staggering 1,500W of power per chip, the highest on the market"[11].

Advanced Software Ecosystem

With these powerful GPU configurations, advanced users can work with:

- Training custom models: Create specialized AI models from scratch

- Running large language models locally: Work with models featuring 30B+ parameters

- Advanced fine-tuning: Customize large models with specialized techniques

- Model merging: Combine different models to create new capabilities

- Distributed training: Spread workloads across multiple GPUs

- Research and development: Push the boundaries of what's possible with consumer hardware

Making Your Choice: Key Considerations

Now that we've explored options across experience levels, here are the key factors to consider when making your final decision:

Memory Capacity and Bandwidth

Memory is often the limiting factor for AI workloads. Larger models require more VRAM, and higher bandwidth improves performance. As a general guideline:

- 8-12GB: Sufficient for basic inference and smaller models

- 16-24GB: Good for most consumer AI applications and smaller training tasks

- 32GB+: Necessary for working with larger models and serious training
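These tiers follow from simple arithmetic: the weights take roughly parameter count times bytes per parameter, and during text generation each new token requires reading all of those weights from memory. The sketch below encodes both rules of thumb. The 20% overhead allowance is an assumption, and the tokens-per-second figure is a theoretical ceiling, not a benchmark.

```python
BYTES_PER_PARAMETER = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(params_billions, precision):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAMETER[precision]


def fits(params_billions, precision, vram_gb, overhead=1.2):
    """True if the weights plus an assumed 20% allowance fit in the given VRAM."""
    return weights_gb(params_billions, precision) * overhead <= vram_gb


def max_tokens_per_second(bandwidth_gb_s, params_billions, precision):
    """Ceiling on generation speed if every weight is read once per token."""
    return bandwidth_gb_s / weights_gb(params_billions, precision)


print(fits(13, "fp16", vram_gb=24))   # 13B at 16-bit on a 24GB card
print(fits(13, "int4", vram_gb=16))   # the same model quantized to 4-bit on 16GB
print(max_tokens_per_second(1008, 7, "fp16"))  # 7B at 16-bit, 1,008 GB/s bandwidth
```

The first check fails and the second passes, which is why quantization matters so much on consumer cards.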

AI-Specific Hardware Acceleration

Modern GPUs include specialized hardware for AI workloads:

- Tensor Cores (NVIDIA): Accelerate matrix operations significantly compared to standard CUDA cores

- Matrix Cores (AMD): Similar to Tensor Cores but with less mature software support

- XMX Matrix Engines (Intel): Intel's equivalent technology in their Arc GPUs

These specialized cores can provide 5-10x performance improvements for AI tasks compared to standard GPU cores[6:7].

Software Ecosystem Compatibility

NVIDIA currently has the most mature software ecosystem for AI development, with the CUDA platform, TensorRT, and extensive library support. AMD (with ROCm) and Intel (with oneAPI and OpenVINO) are improving their offerings but still lag behind in compatibility with popular AI tools.

If you plan to use specific AI software, check its hardware requirements before purchasing. Some tools are optimized exclusively for NVIDIA GPUs.

Power and Cooling Requirements

AI workloads can push GPUs to their limits for extended periods. Ensure your power supply can handle the load and your cooling solution can maintain safe temperatures. Remember that multiple GPUs will multiply your power and cooling needs.

Future Expandability

If you think you might scale up your AI work, consider platforms that support multiple GPUs and efficient interconnects. Some motherboards support 2-4 GPUs, while specialized workstation motherboards can accommodate even more.

The Future of Home AI Computing

As we look ahead, several trends are shaping the future of AI computing at home:

- Increasing specialization: GPUs are evolving to include more AI-specific hardware, improving performance for these workloads

- Power efficiency challenges: As GPUs grow more powerful, managing their energy consumption becomes critical

- Democratization of AI: More affordable options are making AI development accessible to hobbyists and small businesses

- Local AI preference: Growing interest in running models locally for privacy, latency, and customization benefits

Intel's recent announcement of their Arc Pro B-Series GPUs specifically designed for AI workloads demonstrates this trend toward specialized consumer AI hardware[7:1][8:2]. Their "Project Battlematrix" workstation supporting up to eight Intel Arc Pro B60 GPUs shows how multi-GPU computing is becoming more accessible to advanced home users[8:3].

Conclusion: Finding Your AI Silicon Soulmate

Choosing the right GPU for AI work is less about finding the "best" option and more about finding the right match for your specific needs, budget, and ambitions.

For beginners, the RTX 4070 Ti SUPER or AMD Radeon RX 7900 XTX provide excellent entry points without overwhelming investment. Intermediate users will benefit from the additional power of the RTX 4080 SUPER or RTX 4090. Advanced users might consider professional-grade options like the RTX A5000 or multi-GPU setups.

Remember that the field is evolving rapidly—both hardware capabilities and software requirements continue to advance. What seems impossibly ambitious today might be routine tomorrow. The GPU that serves as your introduction to AI might eventually become part of a cluster as your projects grow more ambitious.

Whatever your choice, welcome to the fascinating world of home AI computing—where the once exclusive domain of research labs and tech giants is now accessible from your desk, limited only by your curiosity, creativity, and willingness to explain to your family why the electric bill suddenly doubled.

After all, as one wise AI researcher once told me, "The best GPU for AI is the one you can afford to buy and afford to run—preferably without causing a neighborhood power outage."

⁂

[1] https://kempnerinstitute.harvard.edu/news/graphics-processing-units-and-artificial-intelligence/

[2] https://cloud.google.com/discover/gpu-for-ai

[3] https://www.jetlearn.com/blog/ai-concepts-for-kids-key-terms-explained-in-simple-language

[4] https://telnyx.com/resources/gpu-architecture-ai

[5] https://pcoutlet.com/parts/video-cards/best-gpus-for-ai-ranked-and-reviewed

[6] https://tensorwave.com/blog/gpu-for-ai

[7] https://newsroom.intel.com/client-computing/computex-intel-unveils-new-gpus-ai-workstations

[8] https://www.techtimes.com/articles/310385/20250519/intel-targets-ai-creators-new-arc-pro-gpus-gaudi-3-accelerators-computex.htm

[9] https://www.atlantic.net/gpu-server-hosting/top-10-nvidia-gpus-for-ai-in-2025/

[10] https://www.liquidweb.com/gpu/multi-gpu-setups/

[11] https://www.forbes.com/sites/bethkindig/2024/06/20/ai-power-consumption-rapidly-becoming-mission-critical/